Should AI Be Allowed to Kill? Ethics of Autonomous Weapons

The Rise of Autonomous Weapons

Artificial intelligence is transforming modern warfare. From drone strikes to automated defense systems, AI-powered machines can now identify targets, assess threats, and even launch attacks—with little or no human intervention.

Systems that can select and engage targets without further human intervention are called Lethal Autonomous Weapons Systems (LAWS). Unlike traditional weapons, they can decide when and whom to kill based on sensor input and algorithms.

That raises one of the most disturbing questions in the AI ethics debate:

Should we allow machines to take human lives?

Examples of AI in Military Use

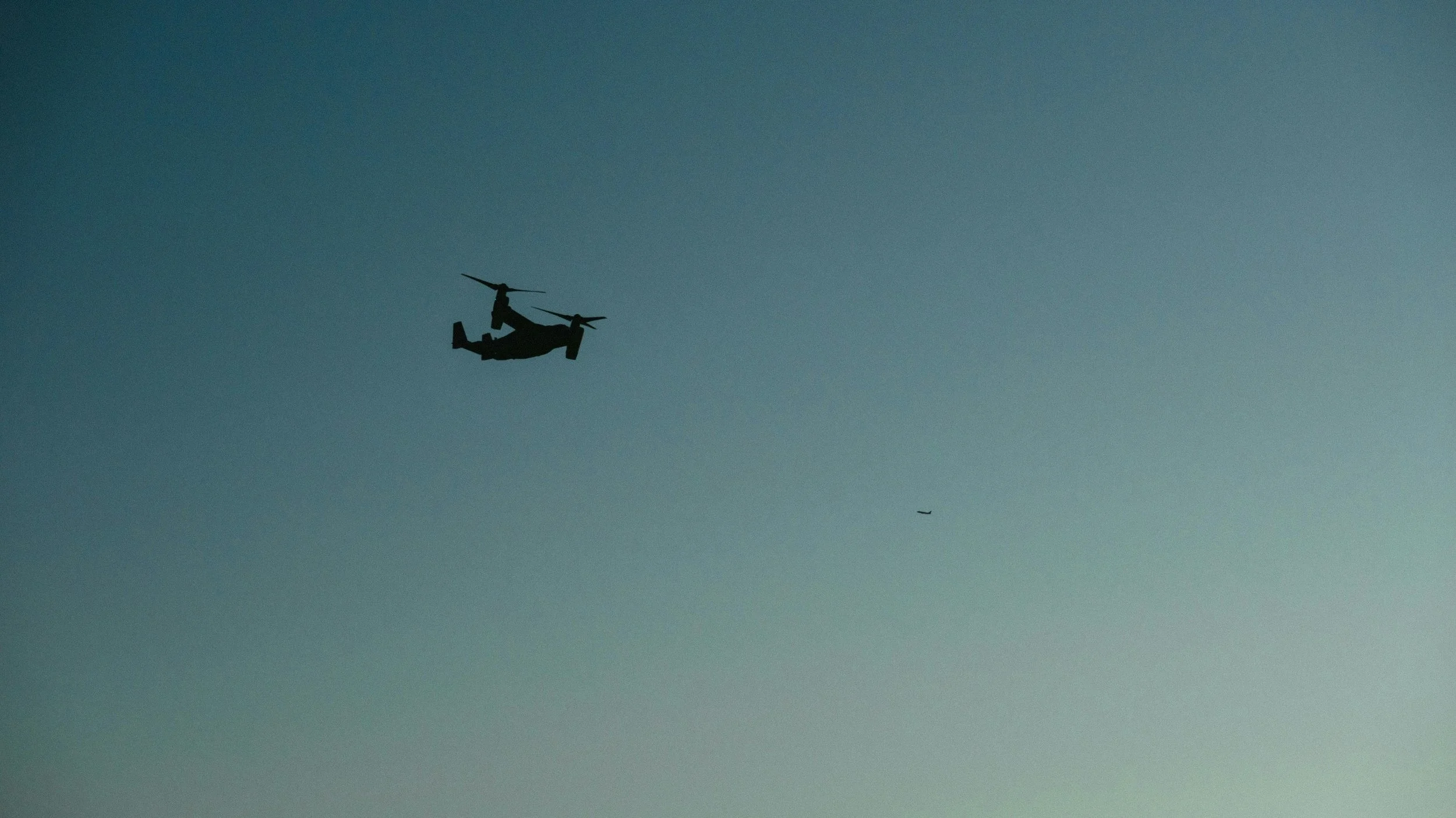

Loitering munitions ("kamikaze drones") like the Harpy and Switchblade

Unmanned ground vehicles that patrol borders or detect mines

AI-enhanced targeting systems used by air forces to reduce human error

Facial recognition drones used in surveillance and combat zones

While many of these systems are currently semi-autonomous, the push toward fully autonomous systems is accelerating.

Key Ethical Dilemmas of AI-Powered Weapons

1. Loss of Human Judgment

Can a machine truly understand the complexity of warfare—like distinguishing between a soldier and a civilian, or knowing when to show mercy?

2. Accountability

If an autonomous drone commits a war crime, who is responsible? The programmer? The military commander? The machine?

3. Escalation of Conflict

Autonomous weapons could make war more likely by reducing the political and human cost of initiating violence.

4. Bias in Targeting

AI systems can inherit racial, gender, or geopolitical biases from their training data—leading to disproportionate harm against certain groups or regions.

5. Moral Delegation

By handing life-and-death decisions to machines, we risk eroding moral responsibility in warfare.

Arguments for Autonomous Weapons

Despite the ethical risks, some argue that AI in warfare could have benefits:

Reduced human casualties: Fewer soldiers on the battlefield

Faster response times: Machines can detect and react faster than humans

Minimized emotional errors: AI doesn’t act out of fear, revenge, or panic

Precision targeting: Potentially less collateral damage—if well designed

The military-industrial complex, unsurprisingly, is investing heavily in this future.

What Does International Law Say?

Current international law, particularly the Geneva Conventions, was not designed with AI in mind. No binding global treaty specifically governs the use of autonomous weapons, though many nations and NGOs are calling for one.

Organizations like the Campaign to Stop Killer Robots are advocating for bans or strict limits, and states have been debating the issue within the framework of the United Nations Convention on Certain Conventional Weapons (CCW).

But key military powers—including the U.S., Russia, and China—have resisted legally binding bans.

Toward Ethical Guidelines and AI Governance in Warfare

✅ What Needs to Happen:

1. Ban Fully Autonomous Lethal Weapons

Machines should never be given the authority to kill without meaningful human oversight.

2. Require Human-in-the-Loop Systems

Decisions to use lethal force should always be subject to human review and command.

3. Establish Clear Legal Frameworks

Nations must agree on binding treaties that define what is and isn’t acceptable in autonomous warfare.

4. Transparency and Audits

Military AI systems should be subject to ethical audits, bias testing, and public accountability.

Conclusion: War Should Never Be Automated

War is one of the gravest actions a society can take. Delegating that responsibility to machines—no matter how “smart”—is not just dangerous. It’s immoral.

The development of autonomous weapons challenges our humanity. If we allow machines to kill without conscience, we risk erasing our most basic ethical lines.

The world must act now to define boundaries before it’s too late.

Next in the AI Ethics Series:

👉 Post 5: Can AI Be Truly Fair? Transparency and Accountability in Algorithms